Connect to Custom AI Provider

Connect to a custom AI provider and use their models for AI-powered apps and automations in Retool.

AI resource integrations are rolling out on cloud instances and will be available for self-hosted instances in a future release. Continue to use the Retool AI resource with Custom AI Provider models if this feature isn't yet available for your organization.

Create a Custom AI Provider resource to connect to any AI service with an OpenAI-compatible API. This lets you integrate self-hosted models, specialized AI providers, or proprietary endpoints. The resource supports connections to services like Ollama, LocalAI, vLLM, or any custom inference server that implements the OpenAI API specification.

What you can do with Custom AI Provider

With a Custom AI Provider resource, you can interact with LLMs to perform various actions, including:

Text actions

| Action | Description |
|---|---|
| Generate text | Create original text content based on your prompt. Use for content generation, creative writing, code generation, and general-purpose text tasks. |
| Summarize text | Condense long-form content into concise summaries. Specify the input text and desired summary format. |
| Classify text | Categorize text into predefined categories. Provide the text to classify and the possible categories. |
| Extract entity from text | Extract specific information from unstructured text. Define what entities or data points to extract (names, dates, amounts, etc.). |

Chat actions

| Action | Description |
|---|---|
| Generate chat response | Create contextual responses in multi-turn conversations. The AI model considers previous messages to maintain conversation flow. |

Image actions

| Action | Description |
|---|---|
| Generate text from image | Analyze images and generate descriptive text. Upload an image or provide an image URL, then ask questions or request analysis. |
| Caption image | Generate descriptive captions for images. Provide an image and optionally specify the caption style or focus. |
| Extract entity from image | Extract specific information from images (text, objects, data). Available with vision-capable models. |
| Generate image | Create images from text descriptions using an image generation model, if your service provides one. Specify image size, style, and quality parameters. |

Available actions depend on your AI service's capabilities. A custom AI provider works with any OpenAI-compatible API, but specific features may vary based on your service implementation.

Supported models

A custom AI provider supports any model accessible through an OpenAI-compatible API. You can connect to:

Popular open-source models:

- Llama 3.3, 3.2, 3.1 (Meta).

- Mistral 7B, Mixtral 8x7B (Mistral AI).

- Phi-4, Phi-3 (Microsoft).

- Gemma 2 (Google).

- Qwen 2.5 (Alibaba).

- DeepSeek V3 (DeepSeek).

Inference platforms:

- Ollama - Local model deployment.

- LocalAI - Self-hosted inference server.

- vLLM - High-throughput serving.

- Text Generation Inference - Hugging Face serving.

- LM Studio - Desktop inference application.

Custom services:

- Proprietary models with OpenAI-compatible APIs.

- Fine-tuned models hosted on your infrastructure.

- Specialized AI services (coding, translation, etc.).

When creating queries, specify the model identifier as configured in your AI service (e.g., llama3.3:70b, mistral-7b-instruct, my-custom-model).

Your AI service must implement the OpenAI Chat Completions API format. Most modern inference servers support this standard. Verify compatibility with your service's documentation.
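As a rough illustration of what the Chat Completions format involves, the following Python sketch builds the kind of request an OpenAI-compatible endpoint accepts and reads the reply from the standard response shape. The function names `build_chat_request` and `extract_reply` are illustrative only and are not part of Retool. The sketch shows the wire format, not how Retool itself issues requests.

```python
import json
import urllib.request


def build_chat_request(base_url, model, prompt, api_key=None):
    """Build (but do not send) a Chat Completions request.

    base_url is assumed to be the OpenAI-compatible base path,
    for example http://localhost:11434/v1.
    """
    url = base_url.rstrip("/") + "/chat/completions"
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # OpenAI-style services expect a bearer token when auth is enabled.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def extract_reply(response_json):
    """Return the assistant text from a Chat Completions response body."""
    return response_json["choices"][0]["message"]["content"]
```

If sending this request to your endpoint (for example with `urllib.request.urlopen`) returns a JSON body containing a `choices` list, that is a reasonable sign the service follows the expected format.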

Before you begin

Before creating a Custom AI Provider resource, you need:

- Retool permissions: Ability to create and manage resources in your Retool organization.

- AI service endpoint: A running AI service with an OpenAI-compatible API (e.g., http://localhost:11434, https://inference.example.com).

- API key (if required): Authentication credentials for your AI service, if it requires authentication.

- Model names: The names or identifiers of available models on your AI service.

- Network access: For self-hosted Retool, ensure network connectivity to your AI service. For Retool Cloud, the service must be publicly accessible or use an SSH tunnel.

Create a Custom AI Provider resource

Create a resource to connect Retool to your custom AI service and configure authentication. Once connected, you can then select it when writing queries to make use of its available models.

Follow these steps to create a Custom AI Provider resource in Retool.

1. Create a new resource

Navigate to Resources in the main navigation and click Create new > Resource. Search for Custom AI Provider. Then, click the Custom AI Provider tile to create a new resource.

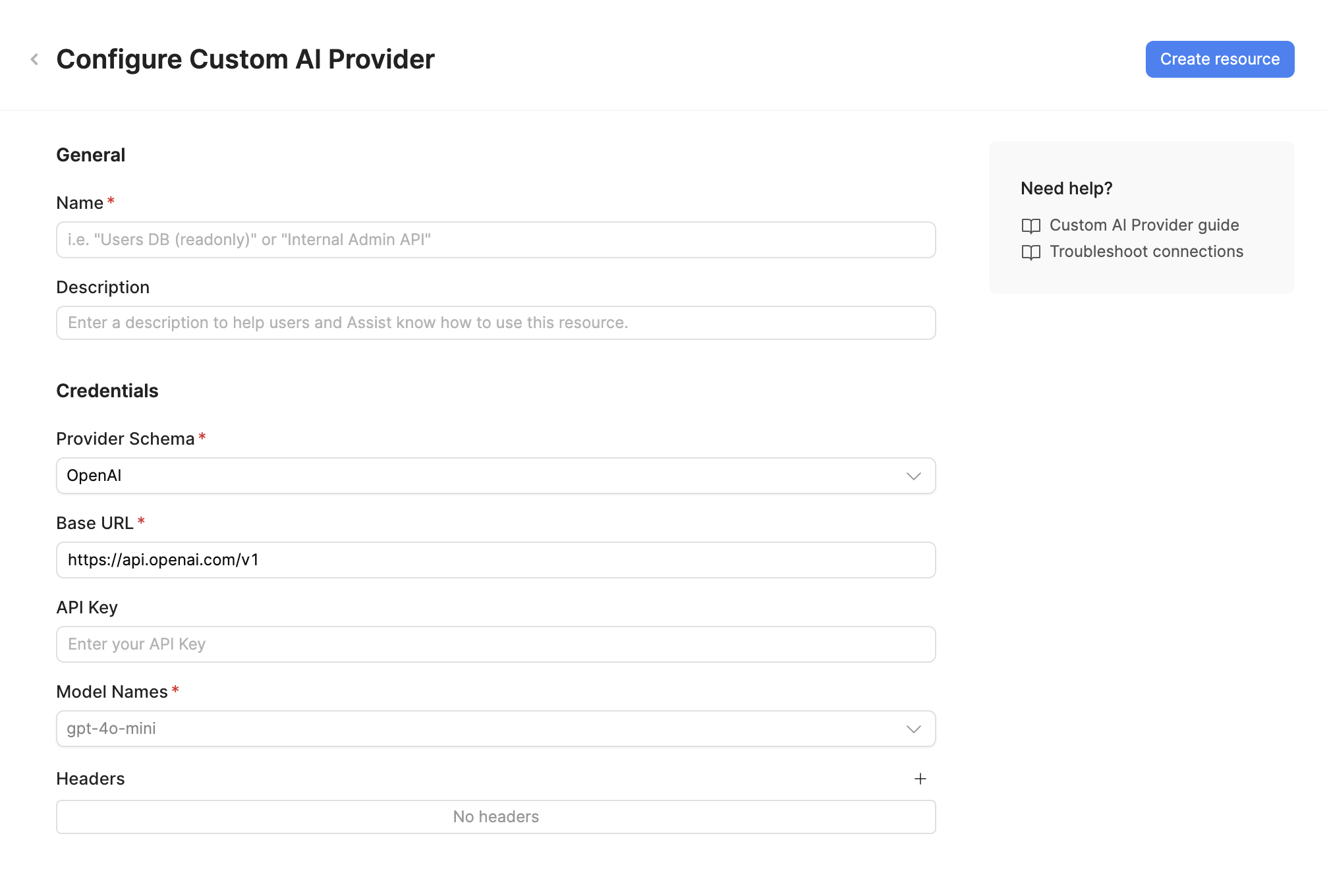

2. Configure connection settings

Specify a name for the resource that identifies it within your organization. Include a description that can provide more context to users and Assist about how to use the resource.

3. Configure authentication

Custom AI Provider resources require an endpoint URL and optional authentication.

- Base URL: The base URL of your AI service API endpoint (e.g., http://localhost:11434/v1, https://inference.example.com/v1). The URL should point to the base path that implements the OpenAI-compatible API.

- API Key (optional): Authentication key for your AI service. Leave blank if your service doesn't require authentication.

- Compatible Schema: Select the API schema your service implements. Options include:

  - OpenAI: Standard OpenAI API format (most common)

  - Anthropic: Anthropic Claude API format

  - Google: Google Gemini API format

  - Cohere: Cohere API format

- Model Names: Specify the model identifiers available from your AI service. Enter model names as they are configured in your service (e.g., llama3.3:70b, mistral-7b-instruct, my-custom-model). These model names will be available when creating queries.

- Headers (optional): Custom HTTP headers to include with requests. Add headers as key-value pairs if required by your service.

For local development, you can connect to services running on localhost. For production deployments, ensure your AI service has a stable URL accessible from your Retool instance.

For Retool Cloud connecting to on-premises services, configure an SSH tunnel or use a VPN connection.
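To make the relationship between these settings concrete, here is a minimal Python sketch that models them as a small data class and shows how they might combine into request headers. `ProviderConfig` and its methods are hypothetical names for illustration, not a Retool API. The bearer-token header follows the usual OpenAI convention, and the rule that at least one model name is present is an assumption of this sketch.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional
from urllib.parse import urlparse


@dataclass
class ProviderConfig:
    """Hypothetical mirror of the resource settings described above."""

    base_url: str
    model_names: List[str]
    api_key: Optional[str] = None
    headers: Dict[str, str] = field(default_factory=dict)

    def validate(self) -> List[str]:
        """Return a list of human-readable problems (empty when none)."""
        problems = []
        parsed = urlparse(self.base_url)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            problems.append("Base URL must be an absolute http(s) URL")
        # Assumption for this sketch: require at least one model name.
        if not self.model_names:
            problems.append("At least one model name is required")
        return problems

    def request_headers(self) -> Dict[str, str]:
        """Combine the API key and custom headers into one header map."""
        merged = {"Content-Type": "application/json"}
        if self.api_key:
            merged["Authorization"] = f"Bearer {self.api_key}"
        # Custom headers are applied last so they can override defaults.
        merged.update(self.headers)
        return merged
```

Checking a configuration this way before entering it in Retool can catch a missing URL scheme (for example `localhost:11434` instead of `http://localhost:11434/v1`) early.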

4. Save the resource

Click Create resource to save your Custom AI Provider resource. The resource is now available for use in apps and workflows.

AI resources do not have a Test connection button. Verify your configuration by creating a query and running it in your app or workflow.
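If you want an additional check outside Retool, one option is to compare the model names you configured against what the service reports. The sketch below assumes your service exposes the OpenAI-style `GET /models` listing endpoint, which many OpenAI-compatible servers provide but not all. The function names are illustrative.

```python
import json
import urllib.request


def model_ids(models_response):
    """Return the model identifiers from a /models response body."""
    return [entry["id"] for entry in models_response.get("data", [])]


def missing_models(configured, available):
    """Return configured model names that the service does not report."""
    available_set = set(available)
    return [name for name in configured if name not in available_set]


def fetch_models(base_url, api_key=None, timeout=10):
    """Call GET {base_url}/models and return the parsed JSON body.

    Assumes the service implements the OpenAI-style model listing.
    """
    request = urllib.request.Request(base_url.rstrip("/") + "/models")
    if api_key:
        request.add_header("Authorization", f"Bearer {api_key}")
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

For example, `missing_models(["llama3.3:70b"], model_ids(fetch_models("http://localhost:11434/v1")))` returns an empty list when the configured name matches a model the service lists.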

Query Custom AI Provider data

Create queries against your Custom AI Provider resource to interact with self-hosted or specialized AI models in your Retool apps and workflows.

Create a query

In your Retool app or workflow, create a new query and select your Custom AI Provider resource from the resource dropdown. Choose an action type to configure what you want the model to do.

Action types

Custom AI Provider resources support the following action types:

Text actions

Process and generate text using your AI service's language capabilities.

| Action | Description |
|---|---|
| Generate text | Create original text content based on your prompt. Use for content generation, creative writing, code generation, and general-purpose text tasks. |
| Summarize text | Condense long-form content into concise summaries. Specify the input text and desired summary format. |
| Classify text | Categorize text into predefined categories. Provide the text to classify and the possible categories. |
| Extract entity from text | Extract specific information from unstructured text. Define what entities or data points to extract (names, dates, amounts, etc.). |

Chat actions

Generate conversational responses based on message history and context.

| Action | Description |
|---|---|
| Generate chat response | Create contextual responses in multi-turn conversations. The AI model considers previous messages to maintain conversation flow. |

Available actions depend on your AI service's capabilities. A custom AI provider works with any OpenAI-compatible API, but specific features may vary based on your service implementation.

Configuration options

You can configure the following options when querying a Custom AI Provider resource.

| Option | Description |
|---|---|
| Model | The model identifier as configured in your AI service (e.g., llama3.3:70b, mistral-7b-instruct, my-custom-model). Available models depend on your service configuration. |
| Temperature | Determines the randomness in responses. Accepts a decimal number between 0.0 and 1.0 (or up to 2.0 for some models). Range and behavior depend on your AI service. Lower values are more focused and deterministic. Medium values are balanced. Higher values are more creative. Not all models support temperature control. |
| System message | Provides detailed instructions for the model's behavior. Use it to define the model's role, response format, or domain expertise. The system message only needs to be defined once and applies to all interactions. It's useful for keeping longer instruction sets separate from the prompt or other values. |
| Prompt | The main input or question for the model. Can use {{ }} dynamic expressions to reference component values or query data. |

Not all models provide support for controlling temperature. If you select a model that does not use this option, Retool removes it from the query editor.

Example system message:

You are a helpful SQL expert. Provide concise, accurate answers with code examples when relevant.

Example prompt:

Analyze this feedback: {{ feedbackTextarea.value }}
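The following Python sketch shows one plausible way these four options map onto an OpenAI-style Chat Completions payload, assuming the OpenAI schema is selected. `build_payload` is a hypothetical helper, and the 0.0 to 2.0 bound is an assumption taken from the widest range mentioned above. Your service may enforce a narrower one.

```python
def build_payload(model, prompt, system_message=None, temperature=None):
    """Map query options onto a Chat Completions request body."""
    messages = []
    if system_message:
        # The system message is sent once, ahead of the user prompt.
        messages.append({"role": "system", "content": system_message})
    messages.append({"role": "user", "content": prompt})

    payload = {"model": model, "messages": messages}
    if temperature is not None:
        # Assumed upper bound; some services only accept 0.0 to 1.0.
        if not 0.0 <= temperature <= 2.0:
            raise ValueError("temperature must be between 0.0 and 2.0")
        payload["temperature"] = temperature
    return payload
```

Leaving `temperature` as `None` omits the field entirely, which corresponds to the case where a model does not support temperature control.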

Common use cases

The following examples demonstrate typical Custom AI Provider integrations in Retool apps.

Internal knowledge base assistant

Create an assistant that answers questions using your organization's internal documentation.

Query example

| Setting | Value |
|---|---|
| Model | llama2:13b |
| Temperature | 0.3 |

System message:

You are a company knowledge base assistant. Answer questions using the provided context from internal documentation. If you're not certain about something, say so. Always cite the source document in your response.

Prompt:

Context from documentation:

{{ searchResults.data.map(doc => doc.content).join('\n\n---\n\n') }}

Question: {{ userQuestion.value }}

Provide a helpful answer based on the context above. Include the document title in your citation.

This pattern works well with Retool Vector Store or other semantic search capabilities to retrieve relevant documentation before querying the AI.
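The prompt above joins only each document's `content`, yet asks the model to cite document titles. A minimal Python sketch of context assembly that also carries titles and caps the total size is shown below. It illustrates the logic only: the `title` field and the `max_chars` limit are assumptions, and `build_context` is a hypothetical helper rather than a Retool function.

```python
def build_context(docs, max_chars=8000):
    """Join retrieved documents into one context string.

    Each doc is assumed to be a dict with "title" and "content" keys.
    Documents are added in order until the next one would exceed
    max_chars (separators are not counted toward the limit).
    """
    sections = []
    used = 0
    for doc in docs:
        section = f"Title: {doc['title']}\n{doc['content']}"
        if used + len(section) > max_chars:
            break
        sections.append(section)
        used += len(section)
    # Same separator as the prompt example above.
    return "\n\n---\n\n".join(sections)
```

Including the title next to each passage gives the model something concrete to cite, and the size cap helps keep the prompt within the context window of smaller local models.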

Code review and suggestions

Use a locally hosted code model to review code and suggest improvements.

Query example

| Setting | Value |
|---|---|
| Model | codellama:13b |
| Temperature | 0.2 |

System message:

You are a code review assistant. Analyze code for: bugs, security issues, performance problems, and style improvements. Provide specific, actionable feedback with examples.

Prompt:

Review this code and provide feedback:

Language: {{ languageSelect.value }}

Code:

{{ codeEditor.value }}

Provide:

1. Issues found (if any)

2. Security concerns (if any)

3. Performance suggestions

4. Code quality improvements

Display the feedback in a Text component or Rich Text Editor.

Domain-specific document analysis

Analyze documents using a model fine-tuned for your specific domain.

Query example

| Setting | Value |
|---|---|
| Model | custom-legal-model |
| Temperature | 0.2 |

System message:

You are a legal document analyzer. Extract key clauses, obligations, dates, and parties from contracts. Identify potential risks or unusual terms.

Prompt:

Analyze this contract and extract:

1. Parties involved

2. Key obligations for each party

3. Important dates (effective date, termination, renewal)

4. Payment terms

5. Termination conditions

6. Notable or unusual clauses

Contract:

{{ contractText.value }}

Use this with File Input components to upload contracts and a Table component to display extracted information.

Manufacturing process optimization

Analyze production data using a model trained on manufacturing processes.

Query example

| Setting | Value |
|---|---|
| Model | mistral:7b |
| Temperature | 0.4 |

System message:

You are a manufacturing process analyst. Analyze production data to identify inefficiencies, bottlenecks, and optimization opportunities. Focus on practical, implementable improvements.

Prompt:

Analyze this production data:

Cycle times: {{ cycleTimesTable.data }}

Defect rates: {{ defectRatesTable.data }}

Equipment utilization: {{ utilizationChart.data }}

Provide:

1. Key performance metrics summary

2. Identified bottlenecks

3. Root cause analysis for top issues

4. Optimization recommendations with expected impact

Private data processing

Process sensitive data while maintaining complete data sovereignty.

Query example

| Setting | Value |
|---|---|
| Model | phi3:mini |
| Temperature | 0.1 |

System message:

Extract structured information from the provided text and return ONLY valid JSON. No explanations or additional text. Never include actual PII in the response, only the structure.

Prompt:

Extract the following information from this customer record and return as JSON:

- customer_type (individual/business)

- industry

- account_status

- risk_level

- requires_review (boolean)

Do not include any PII (names, addresses, IDs, etc.)

Record:

{{ customerRecord.value }}

This approach allows you to analyze sensitive data without sending it to third-party AI services.
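Because models do not always follow "return ONLY valid JSON" instructions, it can help to validate the response before using it. The Python sketch below checks that the output parses and contains exactly the fields requested in the prompt above. `parse_record_summary` is a hypothetical helper, and the strictness (rejecting any extra key) is a design choice of this sketch meant to catch accidental PII fields.

```python
import json

# Field names taken from the prompt in this example.
REQUIRED_FIELDS = {
    "customer_type",
    "industry",
    "account_status",
    "risk_level",
    "requires_review",
}


def parse_record_summary(raw):
    """Parse model output and raise ValueError if it is not as expected."""
    data = json.loads(raw)  # json.JSONDecodeError is a ValueError subclass
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    unexpected = set(data) - REQUIRED_FIELDS
    if unexpected:
        raise ValueError(f"unexpected fields: {sorted(unexpected)}")
    missing = REQUIRED_FIELDS - set(data)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    if data["customer_type"] not in ("individual", "business"):
        raise ValueError("customer_type must be 'individual' or 'business'")
    if not isinstance(data["requires_review"], bool):
        raise ValueError("requires_review must be a boolean")
    return data
```

Rejecting responses with unexpected keys means a stray `name` or `address` field stops at validation instead of flowing into downstream components.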